Search Engine Visibility

Before people can view your content online, it must first pass through search engine crawlers. It helps to understand this process clearly, so let’s take it one step at a time.

First, search engines must be able to discover and navigate your website. Second, search engines will index your webpages.

In order to entice search engines to present your webpages when relevant queries are entered, we must first optimize the following:

Internal Links & File Structure

Organized internal links & organized file structure for successful discovery & navigation of your website by crawlers/robots.

Robots.txt File & Indexing Instructions

Create a robots.txt file that gives search engine crawlers/robots special instructions on how to crawl and index your site.

Unique URLs & Redirects

Elimination of duplicate content & duplicate URLs.

XML Site Map

Submit an XML site map of your site to ensure search engines know where your webpages are and how they can be found.
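As a rough illustration of the robots.txt instructions mentioned above, the sketch below parses a small robots.txt and checks which URLs a crawler may fetch. The domain, the paths, and the rules themselves are hypothetical placeholders, and Python's standard `urllib.robotparser` is used only to show how a crawler might read the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the domain and paths are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a well-behaved crawler might."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Public pages are crawlable; the admin area is blocked.
blog_allowed = parser.can_fetch("*", "https://www.example.com/blog/post")
admin_allowed = parser.can_fetch("*", "https://www.example.com/admin/login")
```

The file must live at the root of the site and be named `robots.txt` for crawlers to find it.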

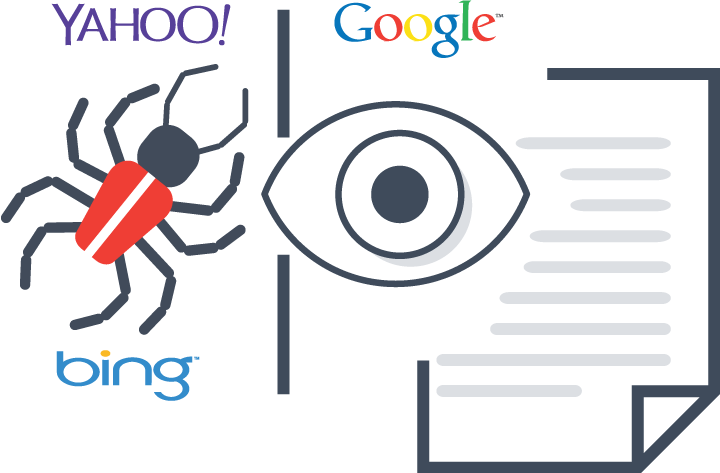

Internal Links & File Structure

Did you know that without quality internal links & an organized file structure, search engines may not crawl your website properly?

We optimize your website’s structure in order to make it easier for search engines to crawl and navigate your site. In order for your site to show up during relevant searches, the search engine must first crawl your website to know how your website is structured and what your content is about.
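To make the idea of crawling through internal links concrete, here is a minimal sketch of how a crawler might collect the internal links on a page. The sample HTML, the domain, and the helper names (`LinkCollector`, `internal_links`) are assumptions made for illustration, not a description of how any particular search engine works.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects links that point to the same host as the page itself."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.internal_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL.
        absolute = urljoin(self.base_url, href)
        # Keep only links on the same host (internal links).
        if urlparse(absolute).netloc == urlparse(self.base_url).netloc:
            self.internal_links.append(absolute)


def internal_links(html: str, base_url: str) -> list:
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.internal_links


# Hypothetical page content.
SAMPLE = (
    '<a href="/services/">Services</a> '
    '<a href="about.html">About</a> '
    '<a href="https://other.example.org/">Partner</a>'
)
links = internal_links(SAMPLE, "https://www.example.com/index.html")
```

A page that no internal link points to would never appear in a list like this, which is why such pages are hard for crawlers to discover.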

XML Site Map

How will search engines present your pages in response to search queries if they don't have a road map of your website?

The second way search engines discover your content is through an XML site map. An XML site map is a listing of your webpages in a special format that is easily read by search engine crawlers/robots.

Every site should have a site map. Every time your content is updated, we submit an updated site map to search engines.
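As an illustration of the special format, the sketch below builds a small XML site map following the public sitemaps.org protocol. The page URLs and dates are hypothetical, and `build_sitemap` is a helper name chosen for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; regenerate the file whenever content changes.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/services/", "2024-01-10"),
])
```

The resulting file is typically saved as `sitemap.xml` at the site root and then submitted to search engines.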

Unique URLs & Redirects

After your site has been discovered & crawled by search engines, your site's web pages are indexed under their URLs.

The URL is your site's web address. It’s important that each of your webpages has a single unique URL so that search engines can differentiate the content of each page. This means that the same content should never be reachable under duplicate URLs.

How do we avoid duplicate URLs?

- By adding a rel="canonical" link tag to your pages, which tells search engines which URL is the preferred one for accessing the content in case duplicate URLs exist.

- By writing the exact, full URL of the page in each canonical URL tag.
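The canonical tag described above can be sketched as follows. The normalization policy here (lowercasing the host and dropping the query string and fragment) is an assumption chosen for illustration; a real site would pick the rules that match how its own URLs work. The function names and the sample URL are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Reduce a URL variant to one preferred form.

    Assumed policy: lowercase scheme and host, drop query string and fragment.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, "", ""))


def canonical_tag(url: str) -> str:
    """Render the link tag that belongs in the page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


# Two variants of the same hypothetical page share one canonical URL.
tag_a = canonical_tag("https://WWW.Example.com/shoes?utm_source=newsletter")
tag_b = canonical_tag("https://www.example.com/shoes#reviews")
```

Both variants produce the same tag, so search engines are pointed at a single preferred URL for the content.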

How do we eliminate duplicate content?

- Duplicate content can arise when content is moved from one page to another, when page names or website names change, or when a site changes content management systems. Those are just a few of the many causes.

- We eliminate duplicate content by setting redirect rules with 301 permanent redirects and 302 temporary redirects. These rules tell search engines that when a previous URL is requested, it must automatically redirect to the new URL.
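The redirect rules above can be sketched as a simple lookup table. The paths are hypothetical, and `resolve` is an illustrative helper rather than part of any web server; in practice these rules usually live in the server or CMS configuration.

```python
from http import HTTPStatus

# Hypothetical redirect rules: old path -> (new path, status code).
REDIRECTS = {
    # Page moved for good: 301 tells search engines to index the new URL.
    "/old-services.html": ("/services/", HTTPStatus.MOVED_PERMANENTLY),
    # Temporary move: 302 tells search engines to keep the original URL.
    "/sale": ("/holiday-sale/", HTTPStatus.FOUND),
}


def resolve(path: str):
    """Return (status, location) for a requested path."""
    rule = REDIRECTS.get(path)
    if rule is None:
        return HTTPStatus.OK, path  # no rule: serve the page as-is
    new_path, status = rule
    return status, new_path


permanent = resolve("/old-services.html")
temporary = resolve("/sale")
unchanged = resolve("/contact")
```

A 301 is the usual choice when content has moved permanently, since it signals that the new URL should replace the old one in the index.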